I've read that after so many read/write cycles, SSDs can stop working properly. Is there such a thing as preemptively replacing them? I just ordered a 1TB Crucial to replace the 500GB Crucial I installed in 2016, not for that reason, but because there is only 19GB of space left after deleting everything I reasonably could. And they are only $48. Can I put the old one into my laptop, or is it near the end of its life?

Should a SSD be replaced after X years?

- Thread starter atikovi

if you ask WD... they say life is only 3 years... take that for what it's worth.....

they wanna sell more drives of course...

HWInfo or the manufacturer appropriate app will tell you the health of your SSD.

I've had only a handful of SSDs fail over the past 10 years, and they have far outlasted the mechanical drives I have owned since the late 1980s. When they do fail, though, it's almost always catastrophic, with little chance of recovery, so plan accordingly.

You can usually run some software that'll give you health statistics on the drive. Intel, Samsung, Toshiba, WD, etc. -- they all have software that'll tell you health and sometimes write cycles.

You could also use CrystalDiskInfo, which should pull the same info.

I'd go by health reported by the software and potentially check write cycles and compare them to the manufacturer's rating. Usually MTBF (mean time between failures) for consumer SSDs used in consumer environments is very high.

Now... go slap a consumer-grade SSD in a server and throw a SQL database on it... good luck. For that there are enterprise-grade SSDs. As with anything, quality tracks the purchase price.
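The comparison against the manufacturer's rating mentioned above can be sketched in a few lines of Python. This is only an illustration: the function name and the example figures (45 TB written, 180 TBW rating) are hypothetical, and it assumes the rating is quoted in decimal terabytes. The bytes-written figure would come from whatever your health tool reports.

```python
def tbw_used_fraction(host_bytes_written: int, rated_tbw_terabytes: float) -> float:
    """Return the fraction of the rated write endurance already consumed.

    1.0 means the rated TBW figure has been reached.
    Assumption: the rating is in decimal terabytes (1 TB = 10**12 bytes).
    """
    rated_bytes = rated_tbw_terabytes * 10**12
    return host_bytes_written / rated_bytes


# Hypothetical example: 45 TB written so far on a drive rated for 180 TBW.
used = tbw_used_fraction(45 * 10**12, 180)
print(f"{used:.0%} of rated endurance used")
```

Reaching the rating does not mean the drive dies that day. It is the point up to which the manufacturer is willing to stand behind the drive.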

atikovi

Thread starter

This one has a 5-year warranty.

I have an old Crucial SSD in service now for about 10 years as the OS drive on a Linux desktop system that is used daily. Since it's just the OS drive, I will run it to failure since I won't lose any data. Still waiting for that to happen. Meanwhile no noticeable loss in performance. This system uses cron to trim the SSD filesystem nightly or weekly (can't remember which).

If this SSD had user data on it, I would have replaced it when the software diagnostics said it reached 100% of its life, which was a few years ago.

You should keep SSDs below 75% capacity for best performance and lifespan.
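A quick way to check a drive against that 75% figure is sketched below. The helper names and the default path are my own choices for illustration; the 75% threshold is just the rule of thumb from the post above, not a manufacturer specification.

```python
import shutil


def fill_fraction(total_bytes: int, free_bytes: int) -> float:
    """Return how full a volume is, as a fraction between 0 and 1."""
    return (total_bytes - free_bytes) / total_bytes


def over_threshold(path: str = "/", threshold: float = 0.75) -> bool:
    """Return True if the volume holding `path` is fuller than `threshold`."""
    usage = shutil.disk_usage(path)  # named tuple with total, used, free (bytes)
    return fill_fraction(usage.total, usage.free) > threshold


# The original poster's drive: roughly 500 GB with 19 GB free.
print(f"{fill_fraction(500 * 10**9, 19 * 10**9):.0%} full")
```

By that rule of thumb, a 500GB drive with 19GB free is well past the point where an upgrade makes sense.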

I run CrystalDiskInfo regularly. My 2019 laptop's SSD is at 96% life remaining after 4 years of very light use. My desktop's SSD* is 2.5 years old and at 95%. The SSDs are for the OS and the programs, and HDDs are for storage along with multiple backups.

*I only went with a meh Crucial P2 because I knew that the computer would be replaced within a few years. I installed the SSD as a mid-life refresh. It only has to last for two more years but it should last much much longer. It may outlast me.

| Life Expectancy (MTTF) | 1.5 million hours |
| Endurance | 600 TB Total Bytes Written (TBW) |
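To put those two spec-sheet numbers in perspective, here is a rough sketch. It assumes a constant failure rate (the usual exponential model behind MTTF figures), reads the endurance figure as 600 TB, and uses a hypothetical 20 GB of writes per day. None of that comes from the spec sheet itself.

```python
import math

HOURS_PER_YEAR = 8766  # 365.25 days * 24 hours


def annualized_failure_rate(mttf_hours: float) -> float:
    """Chance of failing within one year of 24/7 operation,
    assuming a constant failure rate (exponential model)."""
    return 1.0 - math.exp(-HOURS_PER_YEAR / mttf_hours)


def years_to_reach_tbw(rated_tbw_terabytes: float, gigabytes_per_day: float) -> float:
    """Years of steady writing needed to reach the rated TBW figure."""
    days = (rated_tbw_terabytes * 10**12) / (gigabytes_per_day * 10**9)
    return days / 365.25


afr = annualized_failure_rate(1.5e6)
print(f"AFR is about {afr:.2%} per year")
print(f"about {years_to_reach_tbw(600, 20):.0f} years to reach 600 TBW at 20 GB/day")
```

So an MTTF of 1.5 million hours does not mean one drive lasts 171 years. It means that in a large population, well under 1% would be expected to fail per year under this model.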

The real trick would be to mirror the drive. Not sure if that option is available for home computers. We did that at work for crucial data. Essentially you run two identical drives. Chances of two failing at the same time would be very small.

I administer various enterprise flash and hybrid flash SANs at work.

I have yet to see a flash module fail. The spinning ones fail from time to time.

On the desktop side the guys have had some of the M.2 modules fail, but that is the difference between a $90 part and a $2000 part.

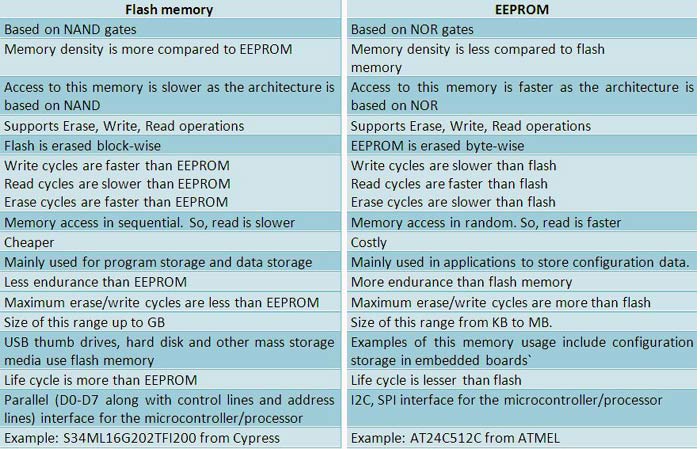

Reads are easy. It's the cumulative erase cycles that cause wear, along with writes. Flash EEPROM needs to be blasted with a high voltage back to a default state for an erase, and only then can the erased blocks be programmed (written). Each erase/write damages the insulator a little bit and the cell gets more difficult to read, which is where error correction comes in. Error correction can help, but that's baked into the hardware.

They have some good sales running on SSDs. I just saw a 2TB one yesterday for $169, with a 7000 MB/s read rate.

I know enough about flash EEPROM to be dangerous. It's erased with that high voltage I mentioned, but only an entire block at a time. That puts the block in the default state where it can be programmed, but it causes damage each time. And programming tunnels a charge into a "floating gate", which results in a little bit of breakdown of the gate insulator.

I've got a WD Blue 1 TB SATA SSD that I've had since 2019 and in four years it's still doing fine in a computer I use pretty much every day. Most of it is for bulk data and backups, so those might result in massive amounts of writes, where eventually blocks have to be erased.

There are different grades. I've got the WD Blue, but there's also the newer WD Blue SA510, which I've heard might have some issues with reliability. Crucial's equivalent seems to be the MX series vs the cheaper BX.

But of course the most important thing to do is have a backup. Having a backup has saved me grief several times when I recovered from it.

atikovi

Thread starter

Is the Crucial I bought a good deal? The same thing I bought 7 years ago, but half the size, was $120.

Those are great. I purchased those and the previous version, MX300, for work. As far as I remember, no MX500 has failed yet. A few BX200s from longer back have failed and some older MX300s were showing severe signs of degradation but all MX500s and MX300s from 2018 and newer have not failed.

In fact, no SSD at work has failed since 2018 and none of the OEM Samsungs in the HP Probook/Elitebooks have ever failed since 2016.

atikovi

Thread starter

You could also use CrystalDiskInfo, which should pull the same info.

What is it saying?

EEPROMs are different from the flash memory used in other things, but I am too lazy to google how different.

I think that SSDs and other flash memory devices don't actually erase the data, i.e. set all the bits back to 0s or 1s. I think when you delete something or format them, it just tells the controller that block x of memory is available to be written to again.

It's saying you got your money out of this drive. 35k hours runtime is a lot! I'm not sure how much longer it will run at 83% good..
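For a very rough feel for "how much longer", one could extrapolate linearly from the two numbers above. This is a naive sketch: it assumes the health percentage keeps dropping at the same rate per power-on hour, which is a big assumption, since wear follows writes and erases rather than hours. The function name is made up for the example.

```python
def remaining_hours_linear(power_on_hours: float, health_percent: float) -> float:
    """Naive linear extrapolation of remaining power-on hours.

    Assumes health falls at a constant rate per power-on hour.
    """
    used_percent = 100.0 - health_percent
    if used_percent <= 0:
        raise ValueError("no wear recorded yet, nothing to extrapolate from")
    return power_on_hours * health_percent / used_percent


hours = remaining_hours_linear(35_000, 83)
print(f"about {hours:,.0f} more power-on hours at the same rate of wear")
```

Treat the result as an order-of-magnitude hint at best. A backup matters more than the estimate.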

Flash is really "flash EEPROM", which is a type of EEPROM that is erased in entire blocks.

Difference between Flash Memory and EEPROM?

EEPROM is used for applications with occasional updates, while Flash memory is suitable for frequent data updates and higher storage capacity

www.electronicsforu.com

Flash memory is a type of electronically-erasable programmable read-only memory(EEPROM), but it can also be a standalone memory storage device such as a USB drive.

And blocks absolutely do get erased. The idea is to get all the trapped charge out of the "floating gate" by zapping it. Blocks have to be erased before they can be programmed, which involves injecting a precise amount of charge into the floating gate to change its threshold voltage - the voltage that turns the transistor on. I remember working with older flash where it was just one bit per transistor, and cells could theoretically be written again without erasing. A block erase always set every bit to 1, and programming could only take a bit to 0. So if a bit was still a 1, it could be programmed to 0, but if it was a 0 it could only stay at 0 until the block was erased. There were creative ways to use this so that blocks didn't need to be erased.
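The one-bit-per-cell behaviour described above (erase sets everything to 1, programming can only clear bits to 0) can be modelled in a few lines. This is a toy model with a made-up class name, not how any real controller or part is driven.

```python
class FlashBlock:
    """Toy model of a single-bit-per-cell flash block, one byte per entry."""

    def __init__(self, size: int = 4):
        self.cells = [0xFF] * size  # a fresh block starts in the erased state
        self.erase_count = 0        # erases are what wear the block out

    def erase(self) -> None:
        """Erase the whole block: every bit goes back to 1."""
        self.cells = [0xFF] * len(self.cells)
        self.erase_count += 1

    def program(self, index: int, value: int) -> None:
        """Program one byte. Bits can only go from 1 to 0, so the stored
        result is the AND of the old contents and the new value."""
        self.cells[index] &= value & 0xFF


block = FlashBlock()
block.program(0, 0b11110000)
block.program(0, 0b10101010)    # second write without an erase in between
print(f"{block.cells[0]:08b}")  # 10100000: only bits that were 1 in both writes survive
block.erase()
print(f"{block.cells[0]:08b}")  # 11111111
```

The "creative ways" mentioned above rely on exactly this: as long as an update only needs to clear more bits, it can be written in place without paying for an erase.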

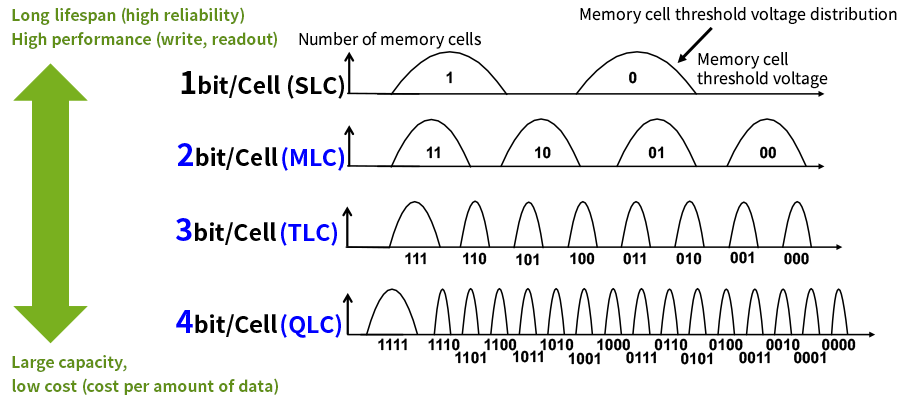

As far as programming goes - that's pretty convoluted these days, because single-level is no longer the standard except maybe for a high-endurance cache. The key is that a flash cell doesn't hold an output that is sampled directly, as with SRAM or DRAM. Instead, programming sets the threshold voltage that turns on a specific transistor, and a read tests what voltage it takes to turn that transistor on. With single-level (1 bit per cell), the goal is to program one of two different threshold voltages. With multi-level (2 bits) it's four. With tri-level (3 bits) it's eight. And with quad-level (4 bits) it's sixteen. The more threshold voltages there are to distinguish, the more complicated it is to test. When there are errors, there are different ways to adjust the thresholds and sample again. I don't fully understand all that stuff. I only understand this from a relatively high level. But there's less margin for error with more bits per cell.
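The level counts above are just powers of two, and the shrinking margin follows directly from squeezing more levels into the same voltage range. A small sketch, where the 6.0 V window is a purely hypothetical number chosen for illustration and the even spacing is a simplification:

```python
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}  # bits per cell


def threshold_levels(bits_per_cell: int) -> int:
    """Number of distinct threshold voltages needed to store this many bits."""
    return 2 ** bits_per_cell


def level_spacing(window_volts: float, bits_per_cell: int) -> float:
    """Gap between adjacent levels if they were spread evenly over the window."""
    return window_volts / (threshold_levels(bits_per_cell) - 1)


WINDOW_VOLTS = 6.0  # hypothetical usable threshold-voltage range

for name, bits in CELL_TYPES.items():
    levels = threshold_levels(bits)
    gap = level_spacing(WINDOW_VOLTS, bits)
    print(f"{name}: {levels:2d} levels, about {gap:.2f} V between neighbours")
```

With the same window, each extra bit per cell roughly halves the gap between neighbouring levels, which is why wear and charge leakage hurt the denser cell types sooner.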

There are all sorts of issues with accuracy as the insulator in a floating gate is damaged from erasing and programming. There's also the issue of charge in the floating gate leaking, which can be temperature dependent.

This shows the target threshold voltage for different cell capacities of flash.

Once they start wearing more and more, it may require more error correction, which then slows down reads.
