Originally Posted By: Shannow

Originally Posted By: 555

Person behind the wheel at fault.

Driver is always supposed to be observant and attending to the controls. How does one not see a fire truck and slow down?

There's a hundred years of experience in industrial automation that says your attitude towards the driver's responsibility is unfounded as soon as you start removing the process from them.

Reread my previous post... when something else is doing most of the thinking, the operator, once alerted, takes MUCH longer to become aware of the problem, analyse it and act.

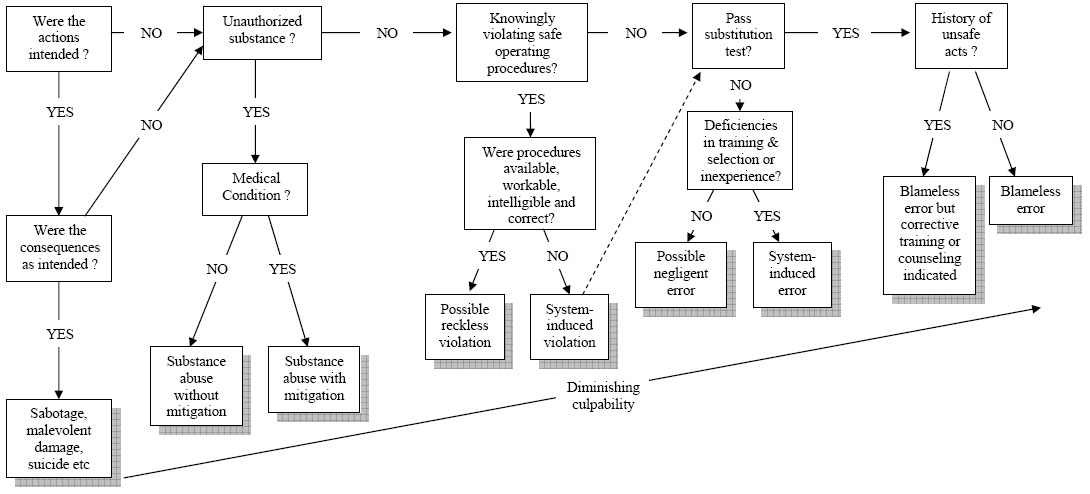

https://www.amazon.com/Managing-Risks-Organizational-Accidents-Reason/dp/1840141050

Good book for anyone to read before blaming the operator.

Remember the semi: Tesla blamed the open area under the load for their car not "seeing" it... there's no excuse for them in this case (until they come up with one).

Are Tesla using everything they could (e.g. LIDAR) before blaming the operator, who THEY say is fully in control?

I agree with you and the problems that arise from removing the operator from the process.

This situation is a slippery slope legally because of shifting responsibilities. Maybe by keeping the operator at the center of legal responsibility, the appeal of this technology will be limited or drivers will start paying attention... I know, I know... they won't.

I'm bummed that so many vehicle owners would prefer not to drive their purchase. What is better than controlling your own destiny?

This technology could provide mobility for those that otherwise would have none. I see that as one of its greatest benefits.

If a driver, operator, or "bag of plasma with a wallet" doesn't have to focus on driving, then odds are they will be looking at a screen, whether on the instrument panel or a mobile device. If the driver is looking at that, then one can sell them stuff and/or "drive" them to where the desired product is located. So now the vehicle you're making payments on for the next 5 years is also a stream of digital junk mail, e.g. "Mr. Johnson, we at Cheapo Rim Protectors have noticed that you have driven on your tires for more than 40,000 miles, and today we have a sale on some tires we think you'll like better," etc. That's why I want the operator to be responsible: maybe, just maybe, there will be some legal ground to keep this sort of marketing distraction on a leash.

Going to buy that book. Looks like my type of reading. Thanks for the recommendation.